1. Apache Spark™ is a fast and general engine for large-scale data processing.

Apache Spark has an advanced DAG (directed acyclic graph) execution engine that supports acyclic data flow and in-memory computing.

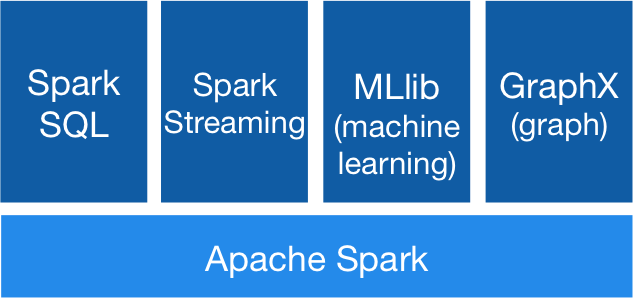

It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming.
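To make "DAG execution" concrete, the following plain-Python sketch (not Spark's scheduler) models a job as named processing steps, each listing the steps whose output it consumes. Because the dependencies form a directed acyclic graph, a topological sort yields an order in which every step runs after its inputs. The step names are hypothetical, and the sketch assumes Python 3.9+ for the standard-library `graphlib` module.

```python
from graphlib import TopologicalSorter

# Hypothetical job: each step maps to the set of steps whose output it consumes.
# These dependencies form a directed acyclic graph (DAG).
job = {
    "read_logs": set(),
    "parse": {"read_logs"},
    "filter_errors": {"parse"},
    "count_by_host": {"filter_errors"},
    "count_by_hour": {"filter_errors"},
    "report": {"count_by_host", "count_by_hour"},
}

def execution_order(dag):
    """Return one valid order in which every step runs after all of its inputs.

    static_order() raises graphlib.CycleError if the graph contains a cycle.
    """
    return list(TopologicalSorter(dag).static_order())

order = execution_order(job)
print(order)
```

Spark's actual DAG scheduler goes further: it splits the graph into stages at shuffle boundaries and runs the tasks within a stage in parallel across data partitions.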

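2. We should look at Spark as an alternative to Hadoop MapReduce rather than a replacement for Hadoop. Spark can run on Hadoop YARN and read data stored in HDFS, so it complements the Hadoop ecosystem rather than displacing it.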

Spark Ecosystem

Spark takes MapReduce to the next level with less expensive shuffles during data processing. With capabilities like in-memory data storage and near-real-time processing, it can run several times faster than disk-based Hadoop MapReduce, especially for iterative and interactive workloads.
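A "shuffle" is the step that redistributes intermediate data by key between the map and reduce phases. As a minimal illustration, assuming a single process and in-memory lists (this is neither Spark nor Hadoop code), here is a word count written as the three MapReduce phases. In a real cluster, the shuffle is the phase that moves data across the network, which is why its cost matters so much.

```python
from collections import defaultdict

def map_phase(lines):
    """Emit a (word, 1) pair for every word in every line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1

def shuffle_phase(pairs):
    """Group values by key; on a cluster this is where data moves between machines."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Combine all values for each key into a single result."""
    return {key: sum(values) for key, values in groups.items()}

lines = ["spark is fast", "spark is general", "hadoop is batch"]
counts = reduce_phase(shuffle_phase(map_phase(lines)))
print(counts)  # {'spark': 2, 'is': 3, 'fast': 1, 'general': 1, 'hadoop': 1, 'batch': 1}
```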

Spark also evaluates big data queries lazily: transformations are only recorded until an action requests a result, which lets Spark optimize the whole chain of steps in a data processing workflow. Spark can hold intermediate results in memory rather than writing them to disk, which is especially useful when you need to work on the same dataset multiple times. It is designed as an execution engine that works both in memory and on disk.
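The following toy model, written in plain Python rather than against Spark, illustrates both ideas from the paragraph above: transformations (`map`, `filter`) are only recorded, actions (`collect`, `count`) trigger the work, and marking an intermediate dataset with `cache()` lets later actions reuse it instead of re-reading the source. The method names echo Spark's RDD API, but the `LazyDataset` class and the `load_numbers` function are hypothetical.

```python
class LazyDataset:
    """Toy model (not Spark): transformations build a recipe; actions run it."""

    def __init__(self, compute):
        self._compute = compute        # zero-argument function producing the records
        self._cache_enabled = False
        self._cached = None            # materialized records once cached

    def map(self, fn):
        # Transformation: record the step, compute nothing yet.
        return LazyDataset(lambda: [fn(r) for r in self._records()])

    def filter(self, pred):
        # Transformation: record the step, compute nothing yet.
        return LazyDataset(lambda: [r for r in self._records() if pred(r)])

    def cache(self):
        # Like Spark, only marks the dataset; it is materialized by the next action.
        self._cache_enabled = True
        return self

    def _records(self):
        if self._cached is not None:
            return self._cached
        records = self._compute()
        if self._cache_enabled:
            self._cached = records
        return records

    def collect(self):  # action
        return list(self._records())

    def count(self):    # action
        return len(self._records())


calls = {"load": 0}

def load_numbers():
    """Stand-in for reading a dataset from storage; counts how often it runs."""
    calls["load"] += 1
    return list(range(10))

evens = LazyDataset(load_numbers).filter(lambda x: x % 2 == 0)
squares = evens.map(lambda x: x * x)
print(calls["load"])      # 0: defining transformations reads nothing

print(squares.collect())  # [0, 4, 16, 36, 64]
print(squares.count())    # 5
print(calls["load"])      # 2: each uncached action re-reads the source

evens.cache()             # mark the intermediate result for reuse
evens.count()             # first action on evens materializes the cache
squares.collect()
squares.count()
print(calls["load"])      # 3: later actions reuse the cached evens
```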

Besides the Spark Core API, the Spark ecosystem includes additional libraries that provide capabilities for big data analytics and machine learning.

These libraries include:

- Spark SQL: Exposes Spark datasets over a JDBC API, so that traditional BI and visualization tools can run SQL queries on Spark data. It also lets users extract data from the format it is currently in (such as JSON, Parquet, or a database), transform it, and load it for ad-hoc querying (ETL).

- Spark Streaming: Processes real-time streaming data using a micro-batch model: the incoming stream is divided into small batches, which are then processed by the Spark engine. Its core abstraction, the DStream (discretized stream), is essentially a sequence of RDDs, one per batch interval.

- Spark MLlib: Spark's scalable machine learning library, consisting of common learning algorithms and utilities, including classification, regression, clustering, collaborative filtering, and dimensionality reduction, as well as underlying optimization primitives.

- Spark GraphX: Spark's API for graphs and graph-parallel computation. At a high level, GraphX extends the Spark RDD by introducing the Resilient Distributed Property Graph: a directed multigraph with properties attached to each vertex and edge. To support graph computation, GraphX exposes a set of fundamental operators (e.g., subgraph, joinVertices, and aggregateMessages) as well as an optimized variant of the Pregel API. In addition, GraphX includes a growing collection of graph algorithms and builders to simplify graph analytics tasks.
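The micro-batch model described for Spark Streaming can be sketched in plain Python as follows. This is not the Spark Streaming API: the function names are hypothetical, the 1-second batch interval is an arbitrary choice, and timestamps are assumed to be non-negative seconds. Each batch plays the role of one RDD in a DStream; the sketch emits one batch per interval, even when no data arrived, and runs the same batch computation (here, a word count) on each.

```python
from collections import Counter

def micro_batches(events, batch_interval):
    """Split (timestamp, record) events into one list per batch interval,
    in time order, mirroring a DStream as a sequence of per-interval RDDs."""
    if not events:
        return []
    last_index = int(max(ts for ts, _ in events) // batch_interval)
    batches = [[] for _ in range(last_index + 1)]  # one (possibly empty) batch per interval
    for ts, record in events:
        batches[int(ts // batch_interval)].append(record)
    return batches

def process_batch(records):
    """The same batch computation (a word count) is applied to every micro-batch."""
    return Counter(word for line in records for word in line.split())

# Hypothetical input: (seconds since the stream started, line of text)
events = [
    (0.2, "spark streaming"),
    (0.7, "spark"),
    (1.1, "micro batch"),
    (3.5, "spark batch"),
]

results = [process_batch(batch) for batch in micro_batches(events, batch_interval=1.0)]
running_total = sum(results, Counter())  # combine per-batch results across the stream

for i, counts in enumerate(results):
    print(f"batch {i}: {dict(counts)}")
print("total:", dict(running_total))
```

A smaller batch interval lowers latency but means more, smaller batches to schedule; this trade-off is inherent to the micro-batch approach.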
