What is Apache Flume?

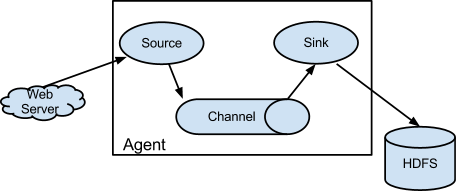

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. It has a simple and flexible architecture based on streaming data flows. It is robust and fault tolerant with tunable reliability mechanisms and many failover and recovery mechanisms. It uses a simple extensible data model that allows for online analytic application.

Flume is designed to move data from many different sources to a centralized data store, such as HDFS or HBase, and it is more tightly integrated with the Hadoop ecosystem than Kafka is. For example, the Flume HDFS sink integrates well with HDFS security. Its most common use case is therefore to act as a data pipeline that ingests data into Hadoop.
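Flume's streaming data flow is built from three pieces: a source that receives external data as events, a channel that buffers those events, and a sink that delivers them to a store such as HDFS. The toy model below sketches that flow in plain Python. It is an illustration under simplifying assumptions, not Flume's API: the class names are hypothetical, and real Flume channels are transactional, which this sketch omits.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Event:
    # Like a Flume event: a byte payload plus string headers
    body: bytes
    headers: dict = field(default_factory=dict)


class MemoryChannel:
    """Hypothetical stand-in for a Flume channel: a bounded buffer between source and sink."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()

    def put(self, event):
        # A full channel pushes back on the source instead of growing without bound
        if len(self.queue) >= self.capacity:
            raise OverflowError("channel full")
        self.queue.append(event)

    def take(self):
        return self.queue.popleft() if self.queue else None


class ListSink:
    """Hypothetical sink that stores event bodies in a list, standing in for e.g. an HDFS sink."""

    def __init__(self):
        self.store = []

    def process(self, channel):
        # Drain everything currently buffered in the channel
        delivered = 0
        while (event := channel.take()) is not None:
            self.store.append(event.body)
            delivered += 1
        return delivered


def source(lines, channel):
    # A source turns external records (here, log lines) into events on the channel
    for line in lines:
        channel.put(Event(body=line.encode("utf-8"), headers={"type": "log"}))


channel = MemoryChannel(capacity=100)
sink = ListSink()
source(["GET /index.html 200", "GET /missing 404"], channel)
print(sink.process(channel))  # 2
```

The bounded channel is the piece that decouples the source's rate from the sink's rate; in a real agent its type and capacity are set in the agent configuration.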

What is Apache Kafka?

Apache Kafka is publish-subscribe messaging rethought as a distributed commit log: a high-throughput, distributed messaging system.

Kafka is a general-purpose publish-subscribe messaging system that offers strong durability, scalability, and fault tolerance. It is not designed specifically for Hadoop; the Hadoop ecosystem is just one of its possible consumers.

Kafka vs. Flume

Compared to Flume, Kafka wins on its superb scalability and message durability.

Kafka is very scalable. One of its key benefits is that it is easy to add a large number of consumers without affecting performance and without downtime. That is because Kafka does not track which messages in a topic have been consumed. It simply keeps all messages in the topic for a configurable retention period, and it is each consumer's responsibility to track its own position through offsets.
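The idea that the log keeps data for a retention period while each consumer owns its own offset can be sketched with a toy, single-partition model. This is a simplification, not Kafka's implementation: real Kafka deletes whole log segments rather than individual records, and current clients usually commit their offsets back to the cluster, but the position is still chosen by the consumer and does not affect retention. All class names here are hypothetical.

```python
import time


class TopicLog:
    """Toy model of one Kafka partition: an append-only log with time-based retention.
    The log keeps no record of which consumers have read what."""

    def __init__(self, retention_seconds):
        self.retention_seconds = retention_seconds
        self.records = []  # list of (offset, timestamp, value)
        self.next_offset = 0

    def append(self, value, now=None):
        now = time.time() if now is None else now
        self.records.append((self.next_offset, now, value))
        self.next_offset += 1

    def enforce_retention(self, now=None):
        # Drop records older than the retention period, regardless of who has read them
        now = time.time() if now is None else now
        cutoff = now - self.retention_seconds
        self.records = [r for r in self.records if r[1] >= cutoff]

    def read(self, offset, max_records=10):
        # Return records at or after `offset`; the caller decides where to start
        return [(o, v) for (o, _, v) in self.records if o >= offset][:max_records]


class Consumer:
    """Each consumer tracks its own position; adding one requires no change to the log."""

    def __init__(self, log):
        self.log = log
        self.offset = 0

    def poll(self, max_records=10):
        batch = self.log.read(self.offset, max_records)
        if batch:
            self.offset = batch[-1][0] + 1
        return [v for _, v in batch]


log = TopicLog(retention_seconds=60)
for i in range(5):
    log.append(f"event-{i}", now=100.0 + i)

a, b = Consumer(log), Consumer(log)
print(a.poll(max_records=3))      # ['event-0', 'event-1', 'event-2']
print(b.poll())                   # all five events, independently of consumer a
log.enforce_retention(now=162.0)  # cutoff 102.0 drops event-0 and event-1
print(a.poll())                   # ['event-3', 'event-4']
```

Adding consumer `b` changed nothing about the log itself, which is the property the paragraph above credits for Kafka's consumer scalability.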

In contrast, adding more consumers to Flume means changing the topology of the Flume pipeline: the channel must be replicated to deliver the messages to a new sink. This does not scale well when you have a huge number of consumers. And because the Flume topology needs to change, adding a consumer requires some downtime.
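The fan-out pattern described above corresponds to Flume's replicating channel selector (configured as `selector.type = replicating` on a source), which copies each event into every channel attached to the source. The toy model below sketches why each new consumer costs another channel and another full copy of the stream. The class and method names are hypothetical, and the sketch ignores sinks and transactions.

```python
from collections import deque


class ReplicatingSource:
    """Toy model of a Flume source whose channel selector replicates every event
    to each attached channel."""

    def __init__(self):
        self.channels = {}

    def attach_channel(self, name):
        # In a real agent this means changing the agent's configuration;
        # here it is just a dict insert.
        self.channels[name] = deque()
        return self.channels[name]

    def emit(self, event):
        # Every event is copied into every channel, so each extra consumer
        # adds another full copy of the stream.
        for queue in self.channels.values():
            queue.append(event)


src = ReplicatingSource()
hdfs_channel = src.attach_channel("hdfs")
src.emit("event-0")

# A consumer added later only sees events emitted after its channel exists
search_channel = src.attach_channel("search")
src.emit("event-1")

print(list(hdfs_channel))    # ['event-0', 'event-1']
print(list(search_channel))  # ['event-1']
print(sum(len(q) for q in src.channels.values()))  # 3 buffered copies
```

Unlike a Kafka consumer, the late-joining `search` channel cannot rewind to earlier events, because nothing retains them once they have been delivered.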

Kafka’s scalability is also demonstrated by its ability to handle spikes in events. This is where Kafka truly shines, because it acts as a “shock absorber” between producers and consumers. Kafka can handle events coming from producers at rates of 100k+ per second. Because Kafka consumers pull data from the topic, different consumers can consume the messages at different paces. Kafka also supports different consumption models: you can have one consumer processing the messages in real time and another processing them in batch mode.
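The pull model and the "shock absorber" effect can be sketched with a toy log: a producer burst lands in the log immediately, and each consumer drains it at its own pace by choosing how much to fetch per poll (loosely analogous to the Kafka consumer setting `max.poll.records`). This is a hypothetical, in-memory illustration, not the Kafka client API.

```python
class Log:
    """Minimal append-only log standing in for a Kafka topic partition."""

    def __init__(self):
        self.records = []

    def append(self, value):
        self.records.append(value)

    def read(self, offset, max_records):
        return self.records[offset:offset + max_records]


class PullConsumer:
    """Consumer that pulls at its own pace by choosing how many records to fetch per poll."""

    def __init__(self, log, batch_size):
        self.log = log
        self.batch_size = batch_size
        self.offset = 0

    def poll(self):
        batch = self.log.read(self.offset, self.batch_size)
        self.offset += len(batch)
        return batch

    def lag(self):
        # Records buffered in the log but not yet read by this consumer
        return len(self.log.records) - self.offset


log = Log()
# A burst from producers: the log absorbs it before any consumer has read anything
for i in range(1000):
    log.append(i)

realtime = PullConsumer(log, batch_size=1)
batch_job = PullConsumer(log, batch_size=500)

for _ in range(10):
    realtime.poll()  # handles records one at a time
batch_job.poll()     # handles a large chunk in one go

print(realtime.lag())   # 990
print(batch_job.lag())  # 500
```

Neither consumer slows the producer or the other consumer; the burst simply shows up as per-consumer lag until each one catches up.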
